Abstract

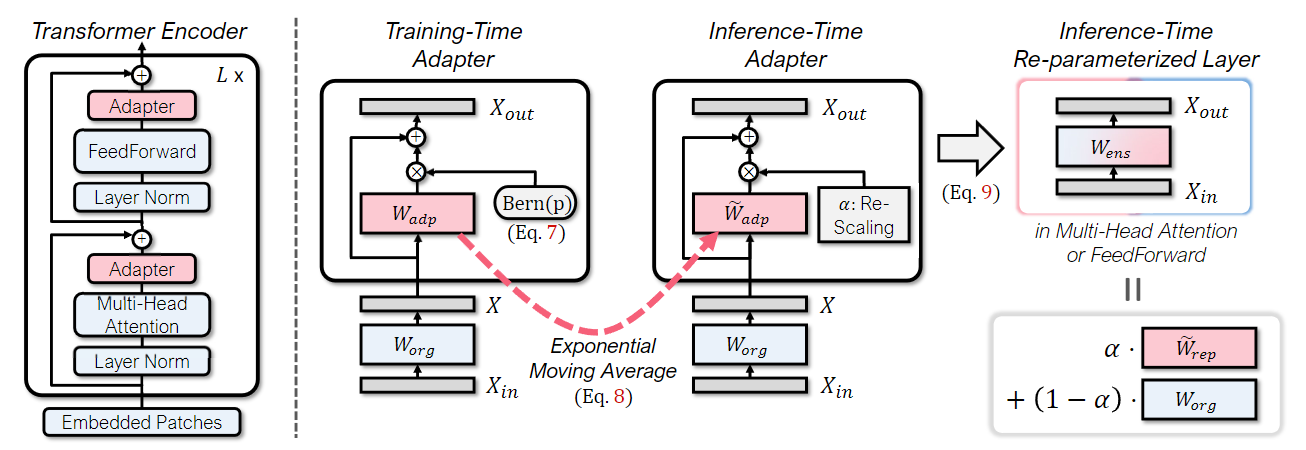

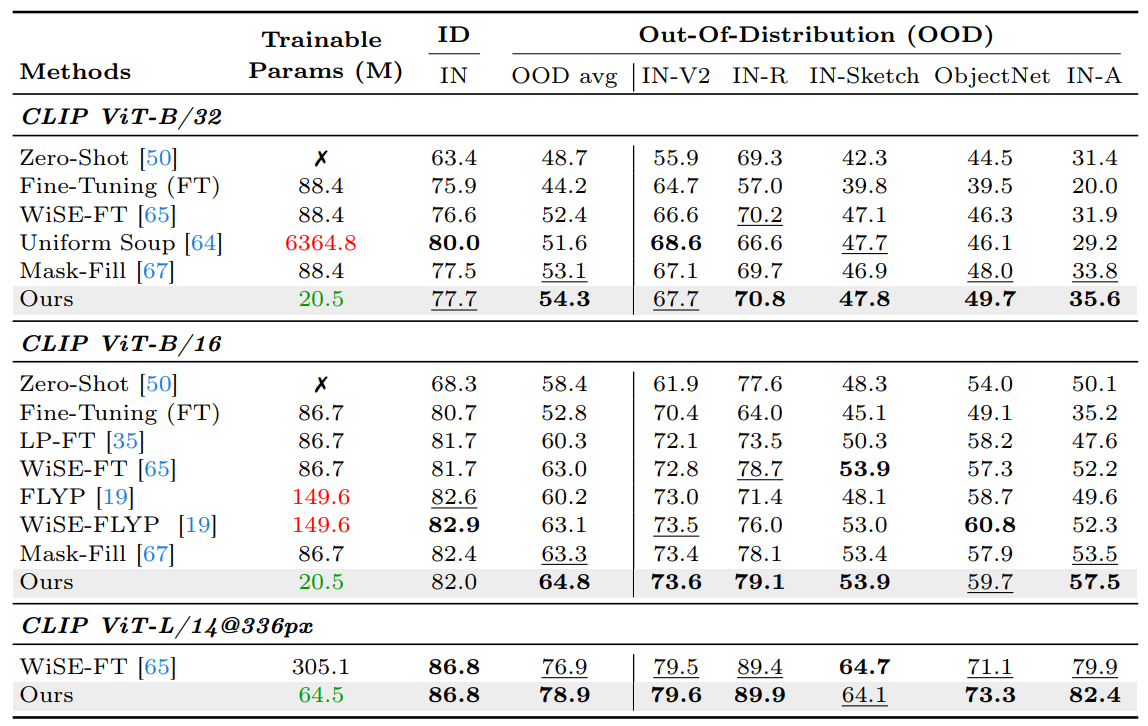

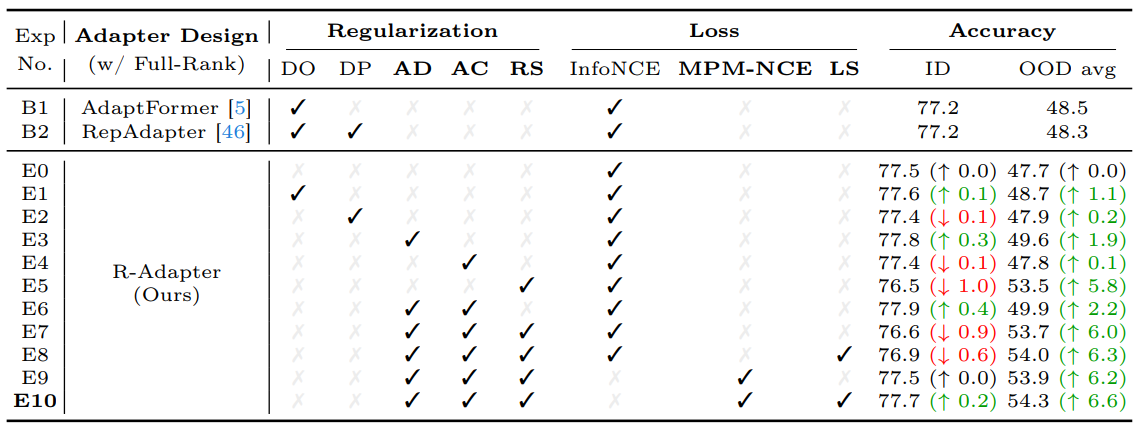

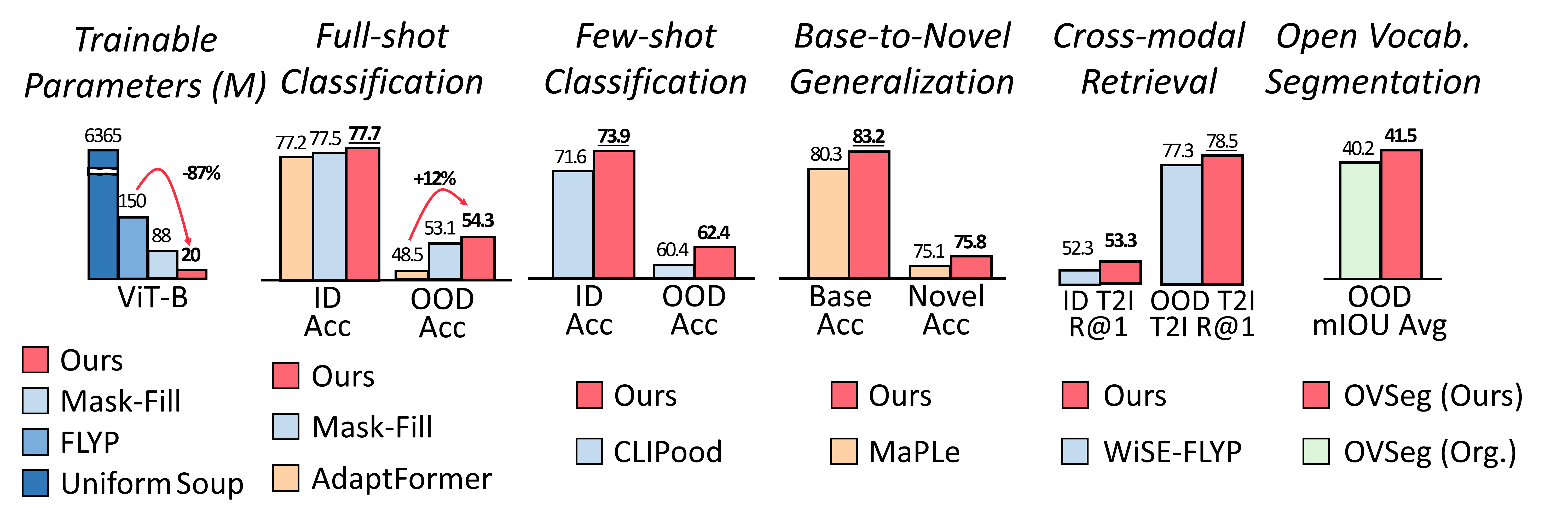

Large-scale image-text pre-trained models enable zero-shot classification and provide consistent accuracy across various data distributions. Nonetheless, optimizing these models for downstream tasks typically requires fine-tuning, which reduces generalization to out-of-distribution (OOD) data and demands extensive computational resources. We introduce Robust Adapter (R-Adapter), a novel method for fine-tuning zero-shot models to downstream tasks while addressing both of these issues. Our method integrates lightweight modules into the pre-trained model and employs novel self-ensemble techniques to boost OOD robustness and substantially reduce storage expenses. Furthermore, we propose the MPM-NCE loss, designed for fine-tuning on vision-language downstream tasks. It ensures precise alignment of multiple image-text pairs and discriminative feature learning. By extending the benchmark for robust fine-tuning beyond classification to include diverse tasks such as cross-modal retrieval and open-vocabulary segmentation, we demonstrate the broad applicability of R-Adapter. Our extensive experiments show that R-Adapter achieves state-of-the-art performance across a diverse set of tasks while tuning only 13% of the parameters of the CLIP encoders.

Figure 1. We present Robust Adapter (R-Adapter), which combines the strengths of robust fine-tuning and parameter-efficient fine-tuning (PEFT). R-Adapter improves parameter and memory efficiency compared to existing robust fine-tuning methods (e.g., Maskfill, ModelSoup) while being more robust than existing PEFT methods (e.g., AdaptFormer, MaPLe). Unlike most existing robust fine-tuning methods, our method applies to a wide range of tasks and consistently outperforms the current best methods on diverse tasks in both in-distribution (ID) and out-of-distribution (OOD) settings.